After the novel was published, I accepted a university teaching job in creative writing, and finally gave up the professional freelance computer work. It had served me well. Now it was time to write.

I found, soon enough, that although I may have stopped chasing the fat consulting paychecks, the impulse to program had not left me. The work of making software gave me a little jolt of joy each time a piece of code worked; when something wasn’t working, when the problem resisted and made me rotate the contours of the conundrum in my mind, the world fell away, my body vanished, time receded. And three or five hours later, when the pieces of the problem came together just so and clicked into a solution, I surfed a swelling wave of endorphins. On the programming section of Reddit, a popular social news site, a beginner posted a picture of his first working program with the caption, “For most of you, this is surely child [sic] play, but holy shit, this must be what it feels like to do heroin for the first time.”2 Even after you are long past your first “Hello, world!” there is an infinity of things to learn, you are still a child, and — if you aren’t burned out by software delivery deadlines and management-mandated all-nighters — coding is still play. You can slam this pleasure spike into your veins again and again, and you want more, and more, and more. It’s mostly a benign addiction, except for the increased risks of weight gain, carpal tunnel syndrome, bad posture, and reckless spending on programming tools you don’t really need but absolutely must have.

So I indulged myself and puttered around and made little utilities, and grading systems, and suchlike. I was writing fiction steadily, but I found that the stark determinisms of code were a welcome relief from the ambiguities of literary narrative. By the end of a morning of writing, I was eager for the pleasures of programming. Maybe because I no longer had to deliver finished applications and had time to reflect, I realized that I had no idea what my code actually did. That is, I worked within a certain language and formal system of rules, I knew how the syntax of this language could be arranged to effect changes in a series of metaphors (the “file system,” the “desktop,” “Windows”), but the best understanding I had of what existed under these conceptualizations was a steampunk-ish series of gearwheels taken from illustrations of Charles Babbage’s Difference Engine. So now I made an attempt to get closer to the metal, to follow the effects of my code down into the machine.

3 THE LANGUAGE OF LOGIC

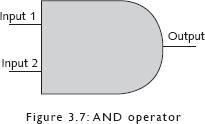

The seven lines of the “Hello, world!” code at the beginning of this book — written in Microsoft’s C# language — do nothing until they are swallowed and munched by a specialized program called a compiler, which translates them into thirty-odd lines of “Common Intermediate Language” (CIL) that look like this:

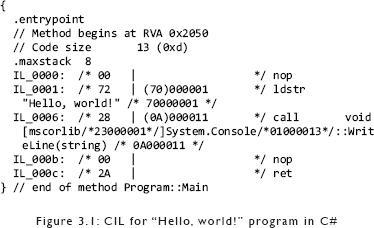

This, as the name of the language indicates, is a mediating dialect between human and machine. You could write a “Hello, world!” program in another Microsoft language like Visual Basic and get almost exactly the same listing, which is how the program is stored on disk, ready to run. When you do run it, the CIL is converted yet again, this time into machine code:

Now we’re really close to computing something, but not quite yet. Machine code is actually a really low-level programming language which encodes one or more instructions as numbers. The numbers are displayed above in a hexadecimal format, which is easier for humans to read than the binary numbers (“101010110001011 …”) sent to the computer’s central processing unit (CPU). This CPU is able to accept these numbers, each of which represents an instruction native to that particular type of CPU; the CPU reacts to each number by tripping its logic gates, which is to say that a lot of physical changes cascade in a purely mechanical fashion through the chips and platters in that box on your desk, and “Hello, world!” appears on your screen.
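The relationship between the hexadecimal and binary forms of the same numbers can be sketched in a few lines of Python, chosen here only for brevity. The function name `to_binary` and the sample bytes are illustrative assumptions; they are not the machine code actually generated for “Hello, world!”.

```python
def to_binary(hex_text: str) -> str:
    """Render hexadecimal byte pairs as groups of eight binary digits."""
    raw = bytes.fromhex(hex_text)  # spaces between byte pairs are ignored
    return " ".join(f"{byte:08b}" for byte in raw)


# Illustrative bytes only (an assumption, not the listing from the text).
sample = "55 8B EC"
print(to_binary(sample))  # prints 01010101 10001011 11101100
```

Each pair of hexadecimal digits stands for exactly eight binary digits, which is why the hexadecimal form is so much shorter to read.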

But, but — what are “logic gates”? Before I began my investigation of the mechanics of computing, this phrase evoked some fuzzy images of ones and zeros and intricate circuits, but I had no idea how all of this worked together to produce “Hello, world!” on my screen. This is true of the vast majority of people in the world. Each year, I ask a classroom of undergraduate students at Berkeley if they can describe how a logic gate works, and usually out of about a hundred-odd juniors and seniors, I get one or two who are able to answer in the affirmative, and typically these are computer science or engineering majors. There are IT professionals who don’t know how computers really work; I certainly was one of them, and here is “Rob P.” on the “programmers” section of stackexchange.com, a popular question-and-answer site:

This is almost embarrassing [to] ask … I have a degree in Computer Science (and a second one in progress). I’ve worked as a full-time .NET Developer for nearly five years. I generally seem competent at what I do.

But I Don’t Know How Computers Work! [Emphasis in the original.]

I know there are components … the power supply, the motherboard, ram, CPU, etc … and I get the “general idea” of what they do. But I really don’t understand how you go from a line of code like Console.Readline() in .NET (or Java or C++) and have it actually do stuff.1

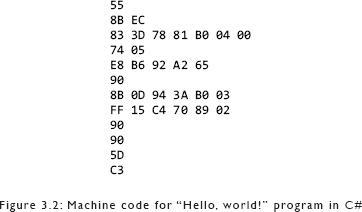

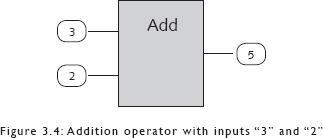

How logic gates “do stuff” is dazzlingly simple. But before we get to their elegant workings, a little primer on terminology: you will remember that the plus sign in mathematical notation (as in “2 + 3”) can be referred to as the “addition operator.” The minus sign is similarly the “subtraction operator,” the forward slash is the “division operator,” and so on. Mostly, we non-mathematicians treat the operators as convenient, almost-invisible markers that tell us which particular kindergarten-vintage practice we should apply to the all-important digits on either side of themselves. But there is another way to think about operators: as functions that consume the digits and output a result. Perhaps you could visualize the addition operator as a little machine like this, which accepts inputs on the left and produces the output on the right:

So if you give this “Add” machine the inputs “3” and “2,” it will produce the result “5.”

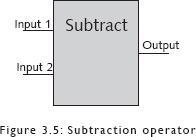

A “Subtract” operator might be imagined like this:

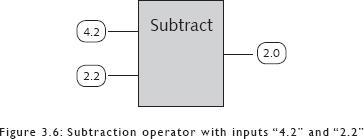

So, giving this “Subtract” operator a first input of “4.2” and a second input of “2.2” will cause it to output “2.0.”

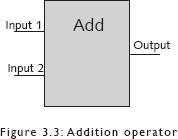
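The idea of an operator as a little machine that consumes inputs and produces an output can be sketched in code. This is a minimal illustration in Python; the names `add_machine` and `subtract_machine` are hypothetical, invented here to mirror the “Add” and “Subtract” machines described above.

```python
import operator


def add_machine(first, second):
    """Consume two inputs and produce their sum."""
    return first + second


def subtract_machine(first, second):
    """Consume two inputs and produce the first minus the second."""
    return first - second


print(add_machine(3, 2))           # prints 5
print(subtract_machine(4.2, 2.2))  # approximately 2.0 (binary floating point)

# Python's standard library already exposes operators as ordinary functions.
print(operator.add(3, 2) == add_machine(3, 2))  # prints True
```

Seen this way, “3 + 2” and `add_machine(3, 2)` are two notations for the same thing: a function applied to two inputs.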

The mathematical addition and subtraction operators above act on numbers, and only on numbers. In his 1847 monograph, The Mathematical Analysis of Logic, George Boole proposed a similar algebra for logic. In 1854, he corrected and extended these ideas about the application of symbolic algebra to logic with a seminal book, An Investigation of the Laws of Thought, on Which Are Founded the Mathematical Theories of Logic and Probabilities. In “Boolean algebra,” the only legal inputs and outputs are the logical values “true” and “false”—nothing else, just “true” and “false.” The operators which act on these logical inputs are logical functions such as AND (conjunction), OR (disjunction), and NOT (negation). So the logical AND operator might look like this: